A Developer’s Guide to Analytics Implementation & Testing

Data drives decisions and growth, and it is an important part of the development process, whether we like it or not. Creating standards and best practices around data saves developers time and eases the frustration of implementing analytics. We’ll discuss five best practices developers can use to integrate analytics implementation and testing into their workflow.

When working with data teams, spending chunks of our time chasing and squashing data bugs is no one’s idea of a good time. Those bugs can have a huge impact on the whole product, even if they often seem insignificant from a development perspective.

We spend so much time bug-squashing because we often find ourselves put in a reactive position by mediocre data management practices. It would remove huge time-wasters for everyone if we could proactively act on data quality. One way to achieve that is becoming a data stakeholder, a role that guides better workflows from the outset. Eradicating trivial data-quality issues allows all engineers on the team to spend less time chasing frustrating bugs and more time building worthwhile code.

We should follow implementation best practices, then test and validate our implementation to make sure our code functions correctly and our data is clean. This helps avoid rework down the road. So, if we’d rather create exciting new features than spend our time dealing with bad data and do-overs, here are the best practices for analytics implementation and testing to follow.

Caring about data analytics implementation and testing best practices saves developers time and frustration

When we care about analytics implementation and validation best practices, we prevent the rework that bad data forces on us later. This saves the many engineering hours that would otherwise be wasted tracking down errors, not to mention the frustration of trying to find what went wrong. Other parts of the company might also be waiting for corrected insights to make clear decisions, and they get held up as well.

One of Avo’s customers realistically expects to cut engineering hours spent on implementing analytics by 80%, and that time saving largely comes from no longer having to chase down data bugs. This makes everyone happier, from the developer implementing tracking code to the product manager analyzing the data to the CEO making data-based decisions.

Rework represents a huge waste of time. Kirill Yakovenko, product manager at Termius, knows this well. Before they started using Avo, Termius’ process for dealing with bad data was laborious and frustrating.

“The problem with tracking mistakes,” says Yakovenko, “is that each fix takes time. It might take a month to roll out a fix for a single issue to all our applications and users.”

Fixing analytics issues didn’t just waste developer hours on the fix itself; it also delayed product decisions because insights are crucial to making the right product decisions.

Analytics implementation and validation processes often aren’t something we consider when we think of testing code, because analytics hasn’t traditionally been a part of our main codebase, nor has designing it been part of our job. But caring about data analytics implementation and testing best practices helps prevent bad data and rework later on. As more businesses recognize the importance of online business as a primary revenue source, data insights are as important as ever, and product analytics solutions like Amplitude and Mixpanel are becoming more critical.

When trying to implement analytics, many developers report:

- Incomplete implementation instructions

- Systems that were designed with data as an afterthought

- Long feedback loops on the correctness of their implementation

- Multiple streams of feedback from different stakeholders

On the testing side, we and our data teams often don’t prioritize testing our code to make sure we’re not putting garbage into our data systems. Testing is time-consuming, complicated, and easy to get wrong, which makes it a low priority. Low priority generally translates to no testing at all, which means that code either ships broken, breaks during a later update, or ships without analytics altogether 💀.

However, it’s important that we take an active role in analytics implementation and testing best practices to help make our data teams’ workdays and our own more efficient. After all, it’s much easier for us to fix data at the source, through testing, than it is to make corrections downstream, especially if we’ve already shipped.

Removing the frustration around data tasks and recouping development time by tweaking these processes is valuable. Bugs can take as much as 30x longer to fix later on if they are not caught early. Developers within our own network report that up to 30% of issues for a single team are analytics bugs. We can slash that number down to size with these best practices in the bag.

Here’s how.

Five best practices for analytics implementation and testing

These five practices make your analytics implementation and testing more efficient and consistent. Your code can be implemented quickly, without taking shortcuts or trading accuracy for convenience. In other words, you’re beating the builder’s paradox.

Think of efficiency as the “human” process side of analytics testing and implementation: the same process or request becomes easier to repeat. Think of consistency as the “code” process side: each repetition produces the same clean code on the other end.

Breaking best practices down into matters of efficiency and consistency means you’ll be able to reap the benefits of attending to analytics implementation and testing: less time spent on frustrating rework and bugs, and more time spent on your regular development workflow.

1. Request full implementation specs every time

Request full implementation specs from your data team or product manager every time, so you know where the code goes in your codebase and what the goal of implementing it is.

The main obstacle to straightforward implementation is that we often get ill-defined data from our product managers. Sometimes, this results in stop-gap solutions that work “well enough” but require rework later on. Other times, it generates a lot of tiresome back and forth on how to implement:

- The devs handle implementation as they think it should be done.

- The PM comes back to the devs with something else that they’d prefer.

- The devs redo the same work.

- The cycle repeats until mutual satisfaction/breakdown.

Holistic specs make implementation and testing more efficient by preventing confusion around necessary actions. Everyone is clear from the jump, and no back-and-forth is needed.

Additionally, these guidelines for implementing your code help ensure that all tracking is consistent between multiple platforms each time.
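To make “full implementation specs” concrete, here is a minimal Python sketch of what a spec entry could capture and how a developer might check an event against it. The `EventSpec` class, its fields, and the `Checkout Completed` event are all hypothetical illustrations, not a format prescribed by any particular tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class EventSpec:
    """One entry in a hypothetical tracking plan."""
    name: str                    # exact event name to send
    trigger: str                 # when the event should fire
    platforms: Tuple[str, ...]   # where it must be implemented
    properties: Dict[str, type] = field(default_factory=dict)  # required props


def validate(spec: EventSpec, name: str, props: dict) -> List[str]:
    """Return human-readable problems; an empty list means the event matches."""
    problems = []
    if name != spec.name:
        problems.append(f"event name {name!r} != {spec.name!r}")
    for key, expected in spec.properties.items():
        if key not in props:
            problems.append(f"missing property {key!r}")
        elif not isinstance(props[key], expected):
            problems.append(f"property {key!r} should be {expected.__name__}")
    # Properties the spec never asked for are flagged too.
    for key in sorted(props.keys() - spec.properties.keys()):
        problems.append(f"unexpected property {key!r}")
    return problems


# Hypothetical spec a PM or data team might hand over.
CHECKOUT_COMPLETED = EventSpec(
    name="Checkout Completed",
    trigger="order confirmation screen is shown",
    platforms=("web", "ios", "android"),
    properties={"order_id": str, "total_cents": int},
)
```

With a spec like this, a call such as `validate(CHECKOUT_COMPLETED, "checkout_completed", {"order_id": "A1"})` reports both the wrong event name and the missing property, which is the kind of mismatch that otherwise surfaces weeks later in a dashboard.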

2. Consolidate feedback into a single source of truth

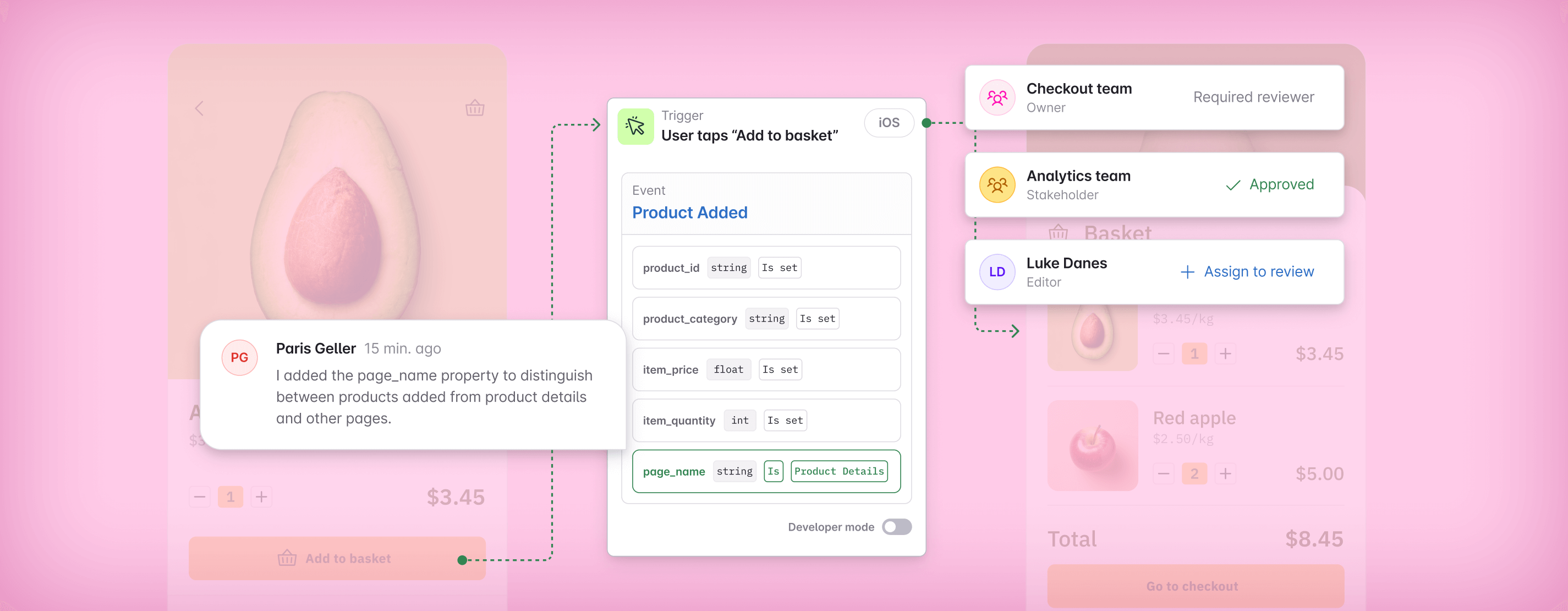

Consolidate feedback into a single source of data truth that the data team can comment on and answer questions around before the code goes to production. This involves the creation of a draft branch — or a draft version for JSON lovers — of your changes in your event analytics software or your tracking plan.

Consolidate feedback to ensure that it remains directed and issue-oriented. This also allows you to gather feedback in the specific context of the suggested changes. That way, everyone can see exactly what will be changed and can give feedback on it.

Feedback can come from anyone: other developers, data analysts, and product managers all have something to say about your approach to data analytics implementation. This feedback is often ad hoc, making it difficult to track the narrative of the changes needed or questions asked. This is especially true if you don’t have a solid tracking plan and your teams are siloed.

When you consolidate feedback into a single source of truth, it increases implementation and testing efficiency by creating a single environment (e.g., a shared doc or an easy-to-use tool like Avo 🙌) in which you can surface questions. Not only is it easier to have the relevant context then and there with descriptions, but your data team can be pulled in to answer any questions that might come up. When your data includes all relevant information and is accessible to all stakeholders at any time, you can expect rapid turnaround on required changes and can track every conversation about them.

Maintaining a single source of truth also helps consistency by giving you a chance to flag issues that arise during testing. Your data team, meanwhile, gets an equal chance to flag an issue with downstream data if/when they come across it.
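One way to picture the draft-branch idea: if the tracking plan is stored as structured data, reviewers can be shown exactly what a draft changes relative to the main plan. The sketch below assumes a plan is a plain dictionary mapping event names to property definitions; the plan contents and the `diff_plans` helper are hypothetical.

```python
def diff_plans(main: dict, draft: dict) -> dict:
    """Summarize what a draft tracking plan changes relative to main."""
    return {
        "added": sorted(draft.keys() - main.keys()),
        "removed": sorted(main.keys() - draft.keys()),
        "changed": sorted(
            name
            for name in main.keys() & draft.keys()
            if main[name] != draft[name]
        ),
    }


# Hypothetical plans: the draft adds one event and extends another.
MAIN_PLAN = {
    "Signup Completed": {"plan": "string"},
    "Checkout Completed": {"order_id": "string"},
}
DRAFT_PLAN = {
    "Signup Completed": {"plan": "string"},
    "Checkout Completed": {"order_id": "string", "total_cents": "int"},
    "Cart Viewed": {"item_count": "int"},
}

summary = diff_plans(MAIN_PLAN, DRAFT_PLAN)
```

A summary like this gives the data team one place to comment on the proposed changes before any tracking code reaches production.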

3. Embrace versioning and test environments

Embrace versioning and test environments so you can test for analytics implementation errors before that code causes problems in your codebase out in the real world. Faulty code during the product cycle is bad enough, but faulty code in your released product can negatively affect customer success and, by extension, your business’ credibility. For example, if you’re in a commerce business, I can tell you that you will not want to be the developer on call (or on the hook!) for an analytics break during the Black Friday rush.😬

Development moves fast, and in the interest of time, testing environments and versioning for analytics specs are either non-existent or weak. Instead, the focus is squarely on getting to market in good time, i.e., shipping fast. As a result, plenty of teams ship a product only to find out downstream that there’s an issue.

Devoting attention to versioning and test environments increases the efficiency of your implementation and testing by solving problems at the source. When you scan for errors there, using versioning and test environments that compare your product’s output against a golden/ideal dataset and a copy of your production dataset, you can clearly see where improvements are needed. You can make them in real time and then press on with development. So long, rework! 🐬

Following versioning and test environment best practices also helps with consistency by incorporating them into your standard product development cycle. It becomes the default. Having a set process for testing your code before it goes to production will help you avoid inconsistencies in implementation before it’s out in the real world. Or, as an Avo user puts it, “Fewer stupid errors with analytics.” As a result, you will consistently be able to release bug-free products that perform better, rather than chasing errors down the road.
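As a rough illustration of checking against a golden dataset, the sketch below compares the events captured during a test run with the sequence you expect. It assumes events are recorded as `(name, properties)` tuples in a test environment; the helper name and the sample events are hypothetical.

```python
from typing import List, Tuple

Event = Tuple[str, dict]  # (event name, properties)


def compare_to_golden(captured: List[Event], golden: List[Event]) -> List[str]:
    """Return mismatches between captured events and the golden sequence."""
    mismatches = []
    # Compare position by position over the overlapping part of both lists.
    for i, (got, want) in enumerate(zip(captured, golden)):
        if got != want:
            mismatches.append(f"event {i}: expected {want}, got {got}")
    # A different length means events were dropped or fired extra times.
    if len(captured) != len(golden):
        mismatches.append(f"expected {len(golden)} events, got {len(captured)}")
    return mismatches


# Hypothetical golden dataset for a checkout flow.
GOLDEN = [
    ("Cart Viewed", {"item_count": 2}),
    ("Checkout Completed", {"order_id": "A1"}),
]
```

Running this in CI against each new version means a dropped or renamed event fails a build instead of silently skewing a report.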

4. Map data dependencies and lineages

Create an ecosystem for mapping downstream dependencies. Knowing how updates or changes will affect dependencies ensures fewer breaking changes are made. It will also create an environment that fosters communication between teams responsible for dependencies throughout the project.

You’ll gain a better understanding of why you’re implementing specific data, and you’ll have a higher stake in the success of capturing and maintaining that data. You’ll also know who to contact if any changes you make cause issues in an important metric or campaign.

Data implementation is often carried out by devs who have a lot of other demands on their time, so they optimize for getting it done. Code goes to production without checks. This is not only a problem in and of itself — as analytics tracking builds on inconsistent implementation, the quality of the data suffers.

When you have a better understanding of why data is being implemented, you can see how the work you’re doing is integral to heading off bugs and misaligned code down the road. Not only will this cause less frustration for you, but your data team will thank you, as will anyone who depends on the insights produced. That could be anyone from marketing and product up to the VP or executive level.

Mapping dependencies and lineages ahead of time cuts down on the rework and headaches that are inevitable when data quality practices are poor. You can paint a representative, upfront picture of what dependencies exist in your code. Testing will then reveal any issues arising from those dependencies before you’re in production. Data produced this way is tracked and tested, and therefore correct and uniform downstream.
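A dependency map does not need to be elaborate to be useful. The sketch below assumes two hypothetical lookup tables, one from events to the downstream assets that consume them and one from assets to their owners, and answers the question “who do I need to talk to before changing this event?”

```python
from typing import Dict, Iterable, List

# Hypothetical lineage: which dashboards and reports consume each event.
DEPENDENCIES: Dict[str, List[str]] = {
    "Checkout Completed": ["Revenue dashboard", "Black Friday campaign report"],
    "Signup Completed": ["Activation funnel"],
}

# Hypothetical ownership of those downstream assets.
OWNERS: Dict[str, str] = {
    "Revenue dashboard": "data team",
    "Black Friday campaign report": "marketing",
    "Activation funnel": "product",
}


def impacted(changed_events: Iterable[str]) -> Dict[str, str]:
    """Map each downstream asset touched by a change to its owner."""
    result = {}
    for event in changed_events:
        for asset in DEPENDENCIES.get(event, []):
            # Assets without a recorded owner are surfaced, not hidden.
            result[asset] = OWNERS.get(asset, "unknown")
    return result
```

Calling `impacted(["Checkout Completed"])` lists the revenue dashboard and the campaign report along with their owners, so the conversation happens before the change ships rather than after a metric breaks.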

5. Use the right tools to make your life easier

There’s a reason the next big thing in analytics is data governance. What has been a frustrating and error-prone process is now being solved with made-for-purpose analytics governance solutions. Instead of just using manual tests, finding the right data analytics tool can streamline your testing and data management and increase your data quality. This allows you to spend more time on the code you enjoy building, and less time squashing analytics bugs.

Avo is made so your data implementation is seamless. Your whole team has access to a single source of truth where data specialists can send clear, explicit implementation instructions to developers for each platform. Developers love it because, in practice, it means: “Goodbye guesswork when implementing code!”

Using a type-safe tool like Avo increases your efficiency because you no longer need to write explicit data tests every time. Instead, you immediately see if the app is getting the expected data or not. Avo can be used in unit testing as part of your full test suite. This makes it easy for you to test analytics functionality without going too far out of your way. Here you can see an example of how to initialize Avo in a Jest test environment with JavaScript.

Tools like Avo are great for your operation’s consistency. Avo’s type-safety means that, unlike a lot of other data analytics tools, you won’t have to troubleshoot your event names and metadata based on syntax. With Avo, you can trust that it’s right every time.
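To show the general idea of typed tracking calls in a unit test, here is a generic Python sketch. It is not Avo’s API or Avo-generated code: the `CheckoutCompleted` class, the `track` function, and the `send` callback are hypothetical stand-ins. It also assumes a static type checker such as mypy is run separately to catch wrong property types, since Python does not enforce annotations at runtime.

```python
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass(frozen=True)
class CheckoutCompleted:
    """Hypothetical typed event: the property names are fixed by the class."""
    order_id: str
    total_cents: int
    event_name = "Checkout Completed"  # unannotated, so not a dataclass field


def track(event: CheckoutCompleted, send: Callable[[str, dict], None]) -> None:
    """Forward a typed event to whatever destination `send` represents."""
    send(event.event_name, asdict(event))


def test_checkout_tracks_expected_payload() -> None:
    sent = []  # fake destination that records calls instead of hitting a network
    track(
        CheckoutCompleted(order_id="A1", total_cents=1999),
        lambda name, props: sent.append((name, props)),
    )
    assert sent == [
        ("Checkout Completed", {"order_id": "A1", "total_cents": 1999})
    ]
```

Because the event is a class rather than a free-form string and dictionary, a misspelled property such as `orderid=` raises a `TypeError` when the event is constructed, instead of producing a quietly malformed event.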

Data analytics implementation and testing best practices are important, and they don’t have to suck

Without any kind of optimization, analytics implementation and testing is a laborious and unappetizing process. By following implementation and testing best practices, you’re saving yourself from a multitude of data bugs and unexpected code rework. Good news! There’s a tool to help you with this. 🥑

Having Avo in your tool stack minimizes the amount of valuable time required for implementation and testing. It can eliminate the need for manual testing entirely. In a time when both quality and speed to market are more important than ever, you can trust that you’re building a better product. Try Avo today to make analytics implementation a breeze for you and your team.
